Developing Airflow Locally with Docker and Makefile

Discover a guide to developing Apache Airflow workloads locally with Docker and a template repository for efficient, consistent development.

One of the common questions that I get as a Data Consultant is, “How do I manage connections across multiple environments when developing Airflow DAGs?”. This question represents a prevalent problem because most companies have replicas of Data Lakes, File Shares, Databases, or even Airflow itself. These replicas are typically used for Testing but also might exist for redundancy. Regardless, the targeted replica usually changes as code gets promoted (usually via a CICD process).

To illustrate the problem further, let’s use the example of our Data Engineer, Bob. Bob is developing Airflow DAGs on his laptop but plans to ship the DAG code to a production Airflow environment. When Bob runs his DAG locally, it orchestrates SQL queries against a Development Redshift Cluster. However, when Bob finishes development on his DAGs and his code gets promoted into a Production Airflow environment, the DAG should execute the same queries against the company’s Production Redshift Cluster.

With this main problem in mind, we can infer that there are two connection strings that Bob needs to provide to Airflow. Bob’s local Airflow will need to access the Development Redshift Cluster, and we’ll pretend that the URI string to connect to that cluster is:

redshift://username:password123@dev-redshift-server.123456789101.us-east-1.redshift-serverless.amazonaws.com:5439/dev

The second connection string is to the Production Database, which the Production Airflow environment will use once Bob promotes his code, and we’ll pretend that the URI string to connect to that cluster is:

redshift://username:password456@prod-redshift-server.123456789101.us-east-1.redshift-serverless.amazonaws.com:5439/prod

The slickest possible solution to this problem lies in using a Secrets Backend. Airflow supports many secret backends, including:

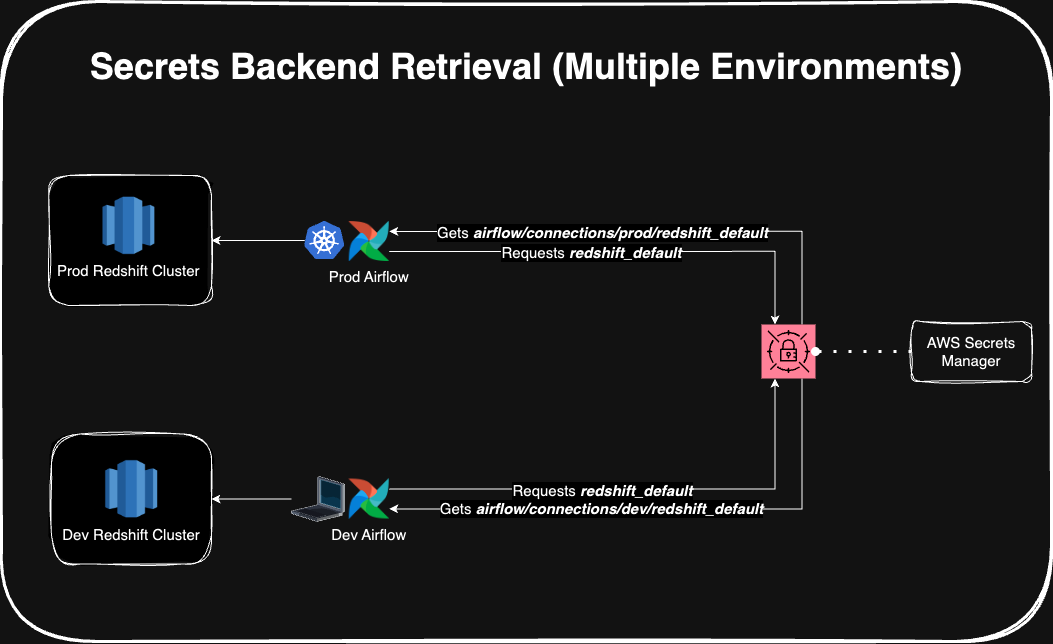

When configuring a secrets backend for Airflow, you must specify keyword argument prefixes for Airflow Variables and Airflow Connections. The prefix helps Airflow to resolve whether to treat the Secret as a Variable or a Connection. However, we can modify the prefixes to separate environment-specific items.

In this blog post, we’ll pretend that Bob is an AWS customer using Secrets Manager. However, implementing the solution on other secret backend options should be close to the same (if not identical). The end result will look something like this:

So, in Bob’s DAG, he wont have to worry about resolving various connection IDs when promoting code from dev to prod because the environment will automatically serve the correct connection URI (based on the prefix in it’s configuration). For example:

BOB DOESN’T DO THIS ❌

from datetime import datetime

from airflow.models import DAG

from airflow.providers.amazon.aws.operators.redshift_sql import RedshiftSQLOperator

if os.getenv('ENVIRONMENT') == 'PROD':

redshift_conn_id = 'redshift_production'

else:

redshift_conn_id = 'redshift_development'

with DAG(

dag_id=f"example_dag_redshift",

...

):

t = RedshiftSQLOperator(

task_id='run_redshift_query',

...,

conn_id=redshift_conn_id

)

BOB DOES THIS! 😎

from datetime import datetime

from airflow.models import DAG

from airflow.providers.amazon.aws.operators.redshift_sql import RedshiftSQLOperator

with DAG(

dag_id=f"example_dag_redshift",

...

):

t = RedshiftSQLOperator(

task_id='run_redshift_query',

...,

conn_id="redshift_default"

# when run locally: uses the airflow/connection/dev/redshift_default secret

# when run in prod: uses the airflow/connections/prod/redshift_default secret

)

So, for Bob to implement this solution, he is going to:

airflow/connections/dev/redshift_defaultairflow/connections/prod/redshift_defaultairflow_dev

AKIACMHDEHA8HE1SHAHSceIAxLQ9sw3eE+L3Sqf1HZPBuN2Y2pW1/QepNiMX {

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"secretsmanager:GetRandomPassword",

"secretsmanager:ListSecrets"

],

"Resource": "*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "secretsmanager:*",

"Resource": [

"arn:aws:secretsmanager:us-east-1:123456789101:secret:airflow/connections/dev/*",

"arn:aws:secretsmanager:us-east-1:123456789101:secret:airflow/variables/dev/*"

]

}

]

}

airflow_prod

AKIALNIEABQ9BQ2ONXTVeqKNmOP7jl6sS+P5Upa2LKGMqL4N3pC3/EhqLkQV {

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"secretsmanager:GetRandomPassword",

"secretsmanager:ListSecrets"

],

"Resource": "*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "secretsmanager:*",

"Resource": [

"arn:aws:secretsmanager:us-east-1:123456789101:secret:airflow/connections/prod/*",

"arn:aws:secretsmanager:us-east-1:123456789101:secret:airflow/variables/prod/*"

]

}

]

}

Add the following configuration to his local Airflow:

AIRFLOW__SECRETS__BACKEND=airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend

AIRFLOW__SECRETS__BACKEND_KWARGS={"connections_prefix": "airflow/connections/dev", "variables_prefix": "airflow/variables/dev"}

AWS_DEFAULT_REGION=us-east-1

AWS_ACCESS_KEY_ID=AKIACMHDEHA8HE1SHAHS

AWS_SECRET_ACCESS_KEY=ceIAxLQ9sw3eE+L3Sqf1HZPBuN2Y2pW1/QepNiMX

Add the following configuration to his production Airflow:

AIRFLOW__SECRETS__BACKEND=airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend

AIRFLOW__SECRETS__BACKEND_KWARGS={"connections_prefix": "airflow/connections/prod", "variables_prefix": "airflow/variables/prod"}

AWS_DEFAULT_REGION=us-east-1

AWS_ACCESS_KEY_ID=AKIALNIEABQ9BQ2ONXTV

AWS_SECRET_ACCESS_KEY=eqKNmOP7jl6sS+P5Upa2LKGMqL4N3pC3/EhqLkQV

If you are looking to improve your Airflow local development workflow or are in search of other Data Consultancy services for your business, please reach out to us on Discord or submit a service request here.